Date: 16 December 2025

The Evolution of Social Engineering in the AI Era

Historically, social engineering succeeded because humans trust familiar patterns. AI-generated content now imitates those patterns with precision. Instead of generic scams, attackers generate targeted instructions that match internal writing styles, project timelines, and organizational terminology scraped from public sources.

The result is a new attack surface. AI-supported phishing, synthetic requests, and mixed-media deepfakes bypass many of the cues employees previously relied on to detect threats. When the content appears credible, the psychological advantage shifts to the attacker.

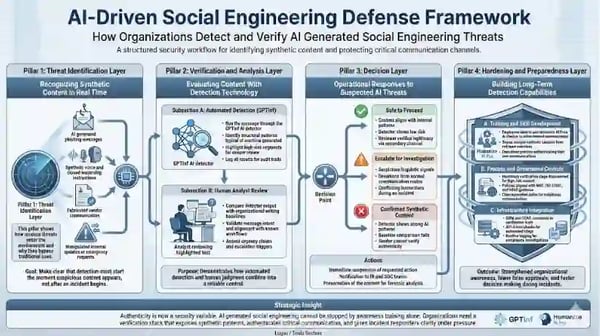

In this environment, organizations need verification tools that expose non-human patterns, highlight inconsistencies, and support leaders who must approve high-risk communications.

The Deepfake Threat Landscape

Deepfakes and synthetic content now appear across several categories of cyber incidents. Each category alters the attack path and influences how teams need to respond.

AI-Generated Phishing Emails

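Attackers use large language models to craft phishing emails that avoid spelling errors, mimic corporate tone, and reference real business functions. These emails often bypass traditional detection because each message is newly generated rather than copied from a known template, which leaves signature-based and template-matching filters little to match.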

Synthetic Voice and Video Attacks on Executives

Executives are now impersonated through high-quality audio and video deepfakes. Attackers replicate leadership voices to request fund transfers, share “updated” vendor information, or instruct teams to bypass normal controls during time-sensitive situations. These attacks are especially dangerous during ongoing incidents when staff are already under pressure.

New Forms of Business Email Compromise

Business email compromise used to depend on access to a real mailbox. AI-generated impersonation expands the model. Attackers no longer need complete access if they can produce content that appears legitimate enough to trigger a response.

Real-World Indicators

Several financial institutions and multinational teams have reported attempts involving synthetic instructions, deepfake calls, or AI-written procurement messages. The consistent pattern across these incidents is simple. Employees were not misled because they were careless. They were misled because the content looked structurally accurate.

Deepfake resilience now requires more than awareness. It requires verification capabilities built into daily workflows.

Content Verification as a Security Control

Content verification technology gives organizations a structured way to evaluate suspicious communication. It helps teams identify signals that indicate AI involvement and provides an audit trail for decisions made during high-pressure situations.

Using an AI Detector in Security Workflows

A modern AI detector like GPTInf can become part of the security stack in the same way endpoint tools and email filters became standard over the past decade. Analysts use detectors to review questionable text and identify patterns that statistically align with machine-generated content. This is not about proving intent. It is about identifying anomalies.

High-value applications include:

- Reviewing unexpected requests from leadership

- Validating vendor or partner communication during onboarding

- Screening incident-related instructions that deviate from known procedures

- Supporting SOC analysts who must triage large volumes of inbound reports

Technical Detection Mechanisms

Detectors analyze lexical structure, token distribution, and pattern regularities that occur less often in human-written content. Attackers can attempt to mask these patterns through paraphrasing or manual editing, and detector scores are probabilistic rather than conclusive, but unusual linguistic signatures can still warrant escalation.
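To make "token distribution" and "pattern regularities" more concrete, the sketch below computes two simple stylometric signals sometimes discussed in this context: vocabulary diversity (type-token ratio) and variation in sentence length, often called burstiness. It is a minimal illustration under stated assumptions, not how GPTInf or any other commercial detector works; production detectors generally rely on language-model probabilities and trained classifiers. The function name `stylometric_features` and the choice of features are hypothetical.

```python
import re
import statistics


def stylometric_features(text: str) -> dict:
    """Compute two simple, illustrative stylometric signals for a message.

    Hypothetical heuristics only; these are NOT a reliable AI detector on their own.
    """
    # Split into sentences on terminal punctuation, dropping empty fragments.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    # Lowercased word tokens (letters, digits, apostrophes).
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words or not sentences:
        return {"type_token_ratio": 0.0, "sentence_length_stdev": 0.0}

    # Vocabulary diversity: distinct words divided by total words.
    ttr = len(set(words)) / len(words)

    # Burstiness proxy: population standard deviation of sentence lengths in words.
    lengths = [len(re.findall(r"[a-z0-9']+", s.lower())) for s in sentences]
    stdev = statistics.pstdev(lengths) if len(lengths) > 1 else 0.0

    return {"type_token_ratio": round(ttr, 3), "sentence_length_stdev": round(stdev, 3)}


sample = "Please approve the transfer today. It is urgent. The vendor changed banks."
print(stylometric_features(sample))
# {'type_token_ratio': 0.917, 'sentence_length_stdev': 0.816}
```

Neither number means much on its own: short messages, templated notices, and careful human writers can all look "regular". Signals like these are only useful when compared against a baseline and combined with human review.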

Integration With Security Infrastructure

Verification tools can integrate with SIEM platforms, SOAR workflows, and secure communications systems. This ensures suspicious items are logged, reviewed, and tied to incident records. AI detection becomes a routine checkpoint rather than an optional step.
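As one way to picture how a verification result gets logged, reviewed, and tied to an incident record, the sketch below builds a structured JSON event that a log pipeline or SIEM could ingest. The `VerificationEvent` schema, its field names, the 0.0 to 1.0 score scale, and the 0.8 threshold are all hypothetical; real SIEM and SOAR platforms define their own schemas and ingestion APIs, so this shows only the shape of the data.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class VerificationEvent:
    """Hypothetical schema for one content-verification result."""
    message_id: str
    channel: str                 # e.g. "email", "chat", "voicemail transcript"
    detector_score: float        # assumed scale: 0.0-1.0, higher = more machine-like
    flagged: bool
    reviewer: Optional[str]      # analyst who reviewed the item, if any
    incident_id: Optional[str]   # link to an open incident record, if any
    timestamp: str               # UTC, ISO 8601


def build_event(message_id: str, channel: str, score: float, threshold: float = 0.8,
                reviewer: Optional[str] = None, incident_id: Optional[str] = None) -> str:
    """Serialize one verification result as a JSON line for a log pipeline."""
    event = VerificationEvent(
        message_id=message_id,
        channel=channel,
        detector_score=score,
        flagged=score >= threshold,
        reviewer=reviewer,
        incident_id=incident_id,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    return json.dumps(asdict(event), sort_keys=True)


# Hypothetical message and incident identifiers.
print(build_event("msg-001", "email", 0.91, incident_id="IR-2025-014"))
```

Emitting one self-describing line per result keeps the integration loosely coupled: the same record can be shipped to a SIEM, attached to a SOAR case, or archived for audit.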

Automated and Manual Review Balance

Automation surfaces candidates for review. Human judgment determines the final action. This balance aligns with NIST and ISO 27001 principles, which emphasize layered controls and documented decision paths.
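A minimal sketch of that division of labor, assuming a detector that returns a score between 0.0 and 1.0: the automated step only ranks messages for review, and the final action can be recorded only by a named human reviewer with a written rationale. The `Candidate` class, the 0.7 threshold, and the decision labels are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Candidate:
    message_id: str
    score: float                      # automated detector score (assumed 0.0-1.0)
    decision: Optional[str] = None    # set only by a human reviewer
    decided_by: Optional[str] = None
    rationale: Optional[str] = None


def surface_candidates(scores: Dict[str, float], threshold: float = 0.7) -> List[Candidate]:
    """Automation step: select and rank messages for review. Makes no final decision."""
    picked = [Candidate(mid, s) for mid, s in scores.items() if s >= threshold]
    return sorted(picked, key=lambda c: c.score, reverse=True)


def record_decision(c: Candidate, reviewer: str, decision: str, rationale: str) -> Candidate:
    """Human step: the final action, with a documented decision path."""
    if decision not in {"release", "block", "escalate"}:
        raise ValueError(f"unknown decision: {decision}")
    if not rationale.strip():
        raise ValueError("a rationale is required for the audit trail")
    c.decision, c.decided_by, c.rationale = decision, reviewer, rationale
    return c


queue = surface_candidates({"m1": 0.95, "m2": 0.40, "m3": 0.72})
print([c.message_id for c in queue])  # ['m1', 'm3']
record_decision(queue[0], "analyst.a", "escalate", "Payment change outside normal vendor process")
```

Requiring a rationale at the point of decision is what produces the documented decision path; the threshold only controls how much reaches the human queue.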

Building Organizational Detection Capabilities

The goal is not just to run checks. It is to build a workforce that understands how synthetic content behaves and how to verify it under operational constraints.

Training Teams to Use an AI Checker Effectively

A structured training program includes regular use of a trusted AI checker. Employees learn how to interpret flagged sections, validate requests, and rewrite internal communication so it reflects real organizational knowledge. This reduces the risk of legitimate internal messages being mistaken for AI-generated threats.

Creating Verification Protocols

Organizations define clear steps for:

- When a message must be checked

- How results are interpreted

- Who has approval authority during high-risk situations

These procedures align with NCSC guidance, which stresses the need for verification controls in modern threat models.
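One way to make "how results are interpreted" and "who has approval authority" unambiguous is to write them down as data that tools and people read the same way. The sketch below assumes a detector score between 0.0 and 1.0; the band thresholds, wording, and role names are hypothetical placeholders that each organization would calibrate and assign for itself.

```python
# Hypothetical score bands, highest floor first; thresholds must be calibrated locally.
BANDS = [
    (0.85, "likely synthetic: hold and escalate"),
    (0.50, "inconclusive: verify through a second channel"),
    (0.00, "no strong signal: proceed with normal controls"),
]

# Hypothetical approval matrix: risk level -> role allowed to approve action.
APPROVERS = {
    "low": "team lead",
    "medium": "security analyst",
    "high": "incident commander",
}


def interpret(score: float) -> str:
    """Translate a detector score into the documented interpretation for its band."""
    for floor, meaning in BANDS:
        if score >= floor:
            return meaning
    raise ValueError("score must be >= 0")


def approver_for(risk: str) -> str:
    """Return the role that may approve action on a flagged message at this risk level."""
    return APPROVERS[risk]


print(interpret(0.6))         # inconclusive: verify through a second channel
print(approver_for("high"))   # incident commander
```

Keeping the bands and the approval matrix in one reviewed place means a threshold change is a documented policy change rather than an undocumented tweak inside a tool.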

Establishing Baseline Procedures

Teams need reference samples of authentic internal communication. Baselines help detectors and human reviewers spot deviations that indicate impersonation attempts or manipulated text.
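As a rough illustration of comparing a message against authentic reference samples, the sketch below measures character-trigram cosine similarity between a new message and a set of baseline messages. This is a deliberately simple stand-in technique chosen for clarity, not the method any particular detector uses; the function names and the trigram choice are assumptions.

```python
import math
from collections import Counter
from typing import List


def char_ngrams(text: str, n: int = 3) -> Counter:
    """Count overlapping character n-grams after lowercasing and collapsing whitespace."""
    t = " ".join(text.lower().split())
    return Counter(t[i:i + n] for i in range(len(t) - n + 1))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def baseline_similarity(message: str, baseline_samples: List[str]) -> float:
    """Highest similarity between a message and any authentic reference sample."""
    m = char_ngrams(message)
    return max((cosine(m, char_ngrams(s)) for s in baseline_samples), default=0.0)


# Hypothetical baseline of authentic internal messages.
baseline = [
    "Hi team, the Q3 budget review moves to Thursday.",
    "Reminder: submit expense reports by Friday.",
]
score = baseline_similarity("Hi team, the Q3 budget review moves to Friday.", baseline)
```

A low similarity relative to a sender's usual range is a prompt for review, not proof of impersonation: legitimate messages about new topics will also score low.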

Red Team Testing With Synthetic Content

Red team exercises now include AI-generated phishing chains, synthetic leadership messages, and manipulated documents. This helps organizations test detection procedures under realistic scenarios.

Integration With Incident Response Planning

Incident response teams are already trained to handle credential theft, system compromise, and data exposure. What most teams are not prepared for is the introduction of AI-generated instructions, synthetic leadership messages, or manipulated evidence during an active event. These elements create confusion, slow down containment, and increase the likelihood of operational mistakes.

Verification technology gives organizations a structured way to validate communications during an incident, which is critical when attackers attempt to redirect responders or impersonate authority figures.

Adding AI Threat Scenarios to Tabletop Exercises

Cyber tabletop exercises should now include synthetic communication as part of the scenario design. Examples include:

- A deepfake voicemail from an executive instructing teams to bypass MFA

- AI-generated supplier notices requesting emergency payment rerouting

- Fabricated status reports meant to mislead leadership during a breach

These scenarios help organizations evaluate how well their teams recognize manipulated content under time pressure.

Updating Incident Response Playbooks

IR playbooks should specify when verification must occur. For example:

- Any request for financial action must be checked

- Any instruction that deviates from known escalation paths must be verified

- Any unexpected leadership message during an incident must be reviewed by security before action

These updates align with the NIST incident response lifecycle, particularly its preparation, detection, and containment phases.
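The three playbook rules above can be expressed as explicit checks so that the reason for each verification is recorded. The `InboundMessage` fields are hypothetical; in practice they would come from analyst triage or from metadata extracted by existing tooling, and deciding what counts as a "known escalation path" still requires human judgment.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class InboundMessage:
    # Hypothetical attributes an IR team might record for each message.
    requests_financial_action: bool
    follows_known_escalation_path: bool
    claims_leadership_sender: bool
    expected: bool          # was this message anticipated by responders?
    incident_active: bool


def verification_reasons(msg: InboundMessage) -> List[str]:
    """Return the playbook rules that require verification before anyone acts."""
    reasons = []
    if msg.requests_financial_action:
        reasons.append("financial action requested")
    if not msg.follows_known_escalation_path:
        reasons.append("deviates from known escalation path")
    if msg.incident_active and msg.claims_leadership_sender and not msg.expected:
        reasons.append("unexpected leadership message during incident: security review required")
    return reasons


print(verification_reasons(InboundMessage(
    requests_financial_action=True,
    follows_known_escalation_path=True,
    claims_leadership_sender=False,
    expected=False,
    incident_active=False,
)))  # ['financial action requested']
```

An empty list means no playbook rule fired; it does not mean the message is authentic.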

Detection and Verification Steps in IR Workflows

Teams incorporate verification as a required control:

- Identify suspicious communication

- Run an AI detection scan through GPTInf

- Compare language with internal baselines

- Escalate anomalies to the incident commander

- Log verification results into the IR system

This ensures that every decision has a documented rationale.
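The five steps can be sketched as a single function. The detector, baseline comparison, and escalation hook are passed in as plain callables because their real interfaces depend on the tools in use; nothing here reflects an actual GPTInf API. The thresholds (0.8 for the detector score, 0.3 for baseline similarity) are placeholder assumptions.

```python
from typing import Callable, Dict, List


def verify_in_ir(message: str,
                 detect: Callable[[str], float],
                 baseline_sim: Callable[[str], float],
                 escalate: Callable[[str, str], None],
                 log: List[Dict],
                 detect_threshold: float = 0.8,
                 baseline_floor: float = 0.3) -> Dict:
    """Apply the IR verification steps to one message already identified as suspicious."""
    # Step 1 (identify) happens upstream; this function receives the suspicious message.
    score = detect(message)             # Step 2: AI detection scan (stand-in callable)
    similarity = baseline_sim(message)  # Step 3: compare language with internal baselines
    anomalies = []
    if score >= detect_threshold:
        anomalies.append(f"detector score {score:.2f}")
    if similarity < baseline_floor:
        anomalies.append(f"baseline similarity {similarity:.2f}")
    if anomalies:
        escalate(message, "; ".join(anomalies))  # Step 4: escalate to the incident commander
    record = {"score": score, "similarity": similarity,
              "anomalies": anomalies, "escalated": bool(anomalies)}
    log.append(record)                  # Step 5: log the result into the IR system
    return record


# Usage with stub callables in place of real integrations.
escalations: List[str] = []
ir_log: List[Dict] = []
result = verify_in_ir(
    "Wire the funds now and skip the usual callback.",
    detect=lambda m: 0.92,        # stub detector score
    baseline_sim=lambda m: 0.55,  # stub baseline similarity
    escalate=lambda m, why: escalations.append(why),
    log=ir_log,
)
```

Because every run appends a record, messages that are not escalated still leave a documented rationale in the log.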

Communication Protocols for Suspected AI Attacks

When a message appears manipulated, responders need a clear path:

- Stop action until verification is complete

- Validate through secondary channels such as direct phone contact

- Notify leadership if synthetic content is confirmed

- Preserve communication logs for forensic review

This avoids operational confusion and supports compliance requirements.
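The first rule, "stop action until verification is complete", is easiest to enforce when a message cannot reach an actionable state without an out-of-band result. The sketch below models that as a small state machine; the class, states, and log format are hypothetical, and setting `leadership_notified` stands in for whatever real notification process an organization uses.

```python
from enum import Enum
from typing import List, Tuple


class Status(Enum):
    HOLD = "hold"            # action paused pending verification
    VERIFIED = "verified"    # confirmed through a secondary channel
    SYNTHETIC = "synthetic"  # confirmed manipulated


class SuspectMessage:
    """Hypothetical tracker enforcing 'no action until verification is complete'."""

    def __init__(self, message_id: str):
        self.message_id = message_id
        self.status = Status.HOLD
        self.log: List[Tuple[str, str]] = [("received", "hold")]  # kept for forensic review
        self.leadership_notified = False

    def record_callback(self, confirmed_by_sender: bool, channel: str) -> None:
        """Record the out-of-band validation result, e.g. a call to a known phone number."""
        if self.status is not Status.HOLD:
            raise RuntimeError("message already resolved")
        self.status = Status.VERIFIED if confirmed_by_sender else Status.SYNTHETIC
        self.log.append((f"callback via {channel}", self.status.value))
        if self.status is Status.SYNTHETIC:
            self.leadership_notified = True  # placeholder for the real notification step
            self.log.append(("leadership notified", self.status.value))

    def may_act(self) -> bool:
        """Only a verified message may be acted on."""
        return self.status is Status.VERIFIED


msg = SuspectMessage("msg-042")
msg.record_callback(confirmed_by_sender=False, channel="phone")
```

The log is append-only in this sketch, so the full sequence of events survives for forensic review regardless of the outcome.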

Training Program Development

Security awareness programs need to evolve from simple phishing education to full-spectrum content authenticity training. Staff must learn how AI-generated material behaves and how verification fits into their job responsibilities.

Security Awareness for AI-Generated Threats

Training includes:

- How to identify unusual linguistic patterns

- How attackers customize AI prompts based on open-source intelligence (OSINT)

- How AI-generated communication attempts to mimic internal structure

Employees learn practical indicators rather than relying on outdated heuristics.

Executive Briefings on Deepfake Risks

Executives are high-value targets. They require specialized training on:

- How attackers clone their voice or writing style

- How to authenticate their own communications

- How to respond when others report suspicious messages attributed to them

This creates an informed leadership layer rather than a vulnerable one.

Hands-On Verification Exercises

Teams practice using AI detection and checking tools in realistic scenarios. They evaluate incoming messages, verify authenticity, and document results. This builds operational confidence.

Continuous Learning Approaches

Threat patterns evolve quickly. Organizations maintain relevance by:

- Rotating new deepfake examples into regular training

- Updating verification procedures quarterly

- Running periodic cross-team simulations

This keeps skills aligned with modern attack methods.

Best Practices for Cybersecurity Teams

Strong verification practices require consistent structure and measurable controls. Cybersecurity teams can follow these principles to build resilient defenses against AI-driven manipulation.

Multi-layered Verification Strategies

A single tool is not enough. Effective strategies combine:

- AI detection for identifying machine-generated patterns

- Human analysis for context and intent

- Secondary authentication channels for confirmation

- Policy-driven controls for escalation

This layered approach reflects ISO 27001 and NCSC expectations for defense in depth.
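A minimal sketch of how the four layers might be combined, assuming each layer reduces to a yes/no signal. The decision rules are illustrative, not a standard: a concern from either the detector or an analyst blocks the request unless out-of-band confirmation succeeds, a successful confirmation can override a detector false positive, and policy-defined high-risk requests wait for confirmation even when nothing is flagged.

```python
from dataclasses import dataclass


@dataclass
class LayerResults:
    detector_flagged: bool     # AI detection: machine-generated patterns found
    analyst_concern: bool      # human analysis: context or intent looks wrong
    secondary_confirmed: bool  # out-of-band confirmation succeeded
    policy_high_risk: bool     # policy classifies this request type as high risk


def layered_decision(r: LayerResults) -> str:
    """Combine independent layers so that no single signal decides on its own."""
    if not r.secondary_confirmed and (r.detector_flagged or r.analyst_concern):
        return "block and escalate"
    if r.policy_high_risk and not r.secondary_confirmed:
        return "hold for secondary confirmation"
    return "proceed"


print(layered_decision(LayerResults(True, False, False, False)))  # block and escalate
```

Organizations can tighten these rules, for example by always escalating an analyst concern regardless of confirmation; the point is that the combination logic is written down rather than left to each responder.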

Technology Stack Recommendations

A practical verification stack includes:

- GPTInf as the AI detection engine for text-based threats

- Humanize AI Pro as the AI checker that restores organizational voice in legitimate internal communication

- Email and communication gateways integrated with verification APIs

- Logging pipelines to capture verification data for audits

This stack provides both speed and traceability.

Policy and Procedure Documentation

Policies define:

- When checks must occur

- Who approves high-risk communications

- How results are stored

- How anomalies influence incident escalation

Clear documentation creates organizational alignment and removes ambiguity.

Measuring Detection Effectiveness

Teams track:

- Detection rate across training scenarios

- Reduction in false approvals

- Time saved during IR decision-making

- Employee accuracy during verification exercises

These indicators help refine training and improve control effectiveness over time.
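The four indicators reduce to simple ratios and differences. The sketch below shows one way to compute them; the function names are hypothetical, and the numbers in the usage lines are invented inputs for illustration, not measured results.

```python
from statistics import median
from typing import List


def detection_rate(flagged_synthetic: int, total_synthetic: int) -> float:
    """Share of synthetic items in training scenarios that were correctly flagged."""
    return flagged_synthetic / total_synthetic if total_synthetic else 0.0


def relative_reduction(before: int, after: int) -> float:
    """Relative drop in false approvals between two periods (0.25 means 25% fewer)."""
    return (before - after) / before if before else 0.0


def median_time_saved(before_minutes: List[float], after_minutes: List[float]) -> float:
    """Difference in median IR decision time, in minutes."""
    return median(before_minutes) - median(after_minutes)


def accuracy(correct: int, total: int) -> float:
    """Share of verification-exercise judgments that were correct."""
    return correct / total if total else 0.0


# Invented example inputs, not real measurements.
print(detection_rate(18, 20))                         # 0.9
print(relative_reduction(8, 2))                       # 0.75
print(median_time_saved([30, 45, 60], [20, 25, 40]))  # 20
print(accuracy(42, 50))                               # 0.84
```

Medians are used for decision time because a single unusually long incident would otherwise dominate the average.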

Conclusion: The Future of AI Threats in Cybersecurity

AI is not only accelerating legitimate work. It is accelerating attacker capability. Deepfakes, synthetic content, and AI-generated instructions create a threat environment where traditional awareness training is not enough. Organizations need structured verification systems that can detect manipulation, authenticate communication, and support high-stakes decisions.

A verification stack built around a reliable AI detector like GPTInf and an operational AI checker like Humanize AI Pro gives organizations that advantage. These tools help teams identify synthetic patterns, restore authentic communication, and maintain control during incidents.

The next step is straightforward. Define the document types where authenticity matters, integrate verification into daily operations, and build training programs that reflect real-world attack models. Organizations that adopt these practices now will be significantly better prepared for the next generation of AI-driven threats.